Eating and drinking are essential parts of everyday life, yet many people around the world rely on others to feed them. The original RAFv2 work presented a prototype robot-assisted self-feeding system for individuals with movement disorders. The system was capable of perceiving, localizing, grasping, and delivering food items to an individual.

Developing a system such as RAFv2 requires collaboration with individuals with movement disorders to gather extensive feedback on its existing capabilities. This normally means a demonstration over a virtual call or an in-person user test. These methods can make it difficult to convey the system's capabilities to the user, or they can be costly to perform. In this work, we present an online simulation of the RAFv2 system, intended to gauge user interest in the system's current features and to obtain feedback on its current state. The simulation gives users a clearer picture of how the RAFv2 system would work and lets them make informed suggestions for the project.

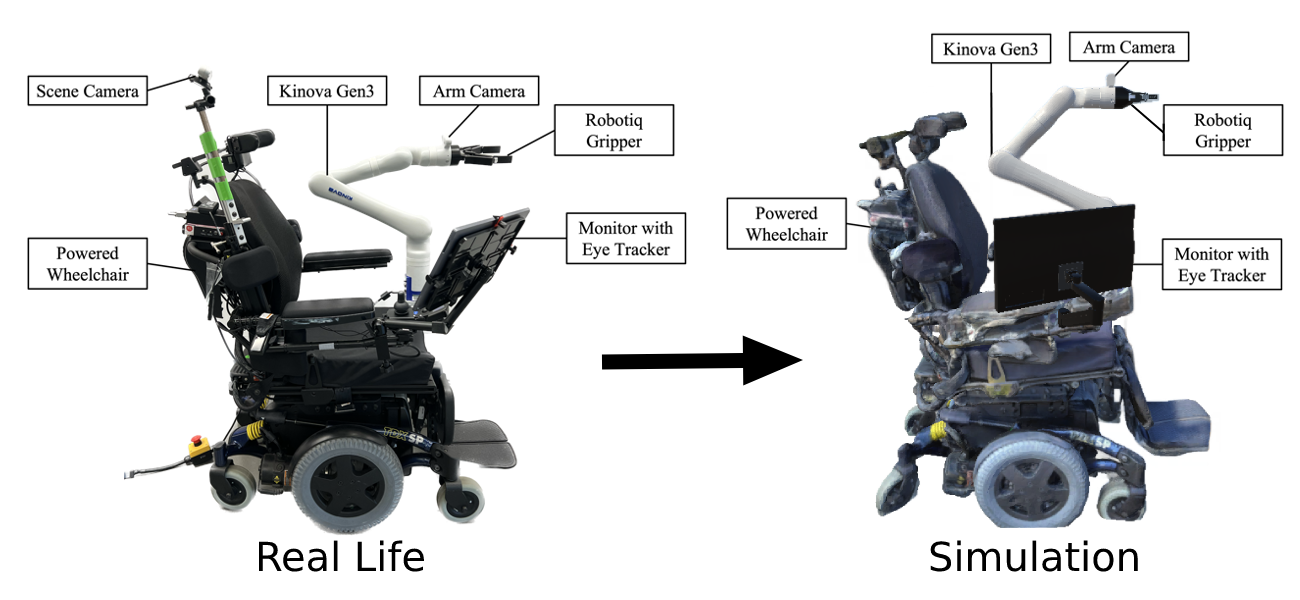

We used the Unity3D engine to build a simulated representation of the RAFv2 system. The simulation presents the control modes available to the user and shows how each one operates. The main premise of the RAFv2 system is that the user can choose a food object through a graphical user interface and have the robot bring that object to them for consumption. The simulation implements this as guided control, showing how the robot would operate in real life if the user chose that action. The other control modes implemented are Cartesian mode, where the robot moves along the x, y, and z axes, and joint mode, where the user controls each joint individually.
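The three control modes can be sketched roughly as follows. This is an illustrative Python model only, not the project's Unity code: the names `ControlMode`, `SimulatedArm`, and `apply_command`, the six-joint count, and the two-waypoint guided path are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class ControlMode(Enum):
    GUIDED = "guided"        # user picks a food item; robot delivers it
    CARTESIAN = "cartesian"  # user moves the end effector along x, y, or z
    JOINT = "joint"          # user moves one joint at a time


@dataclass
class SimulatedArm:
    # Hypothetical state: end-effector position and six joint angles.
    # The real robot's joint count is not stated in this document.
    position: list = field(default_factory=lambda: [0.0, 0.0, 0.0])
    joints: list = field(default_factory=lambda: [0.0] * 6)

    def step_cartesian(self, axis, delta):
        """Move the end effector by delta along one of the axes 'x', 'y', 'z'."""
        self.position["xyz".index(axis)] += delta

    def step_joint(self, joint_index, delta):
        """Rotate a single joint by delta, leaving the others untouched."""
        self.joints[joint_index] += delta

    def guided_deliver(self, food_position, mouth_position):
        """Visit the chosen food item, then the user; return the waypoints."""
        waypoints = [list(food_position), list(mouth_position)]
        self.position = list(mouth_position)
        return waypoints


def apply_command(arm, mode, **command):
    """Dispatch one user command to the handler for the active mode."""
    if mode is ControlMode.CARTESIAN:
        return arm.step_cartesian(command["axis"], command["delta"])
    if mode is ControlMode.JOINT:
        return arm.step_joint(command["joint_index"], command["delta"])
    return arm.guided_deliver(command["food_position"], command["mouth_position"])
```

For example, `apply_command(arm, ControlMode.CARTESIAN, axis="y", delta=0.1)` nudges the end effector along the y axis, while guided mode needs only the selected food position and the delivery position.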

More information on the RAFv2 system can be seen at this link.

Troubleshooting: